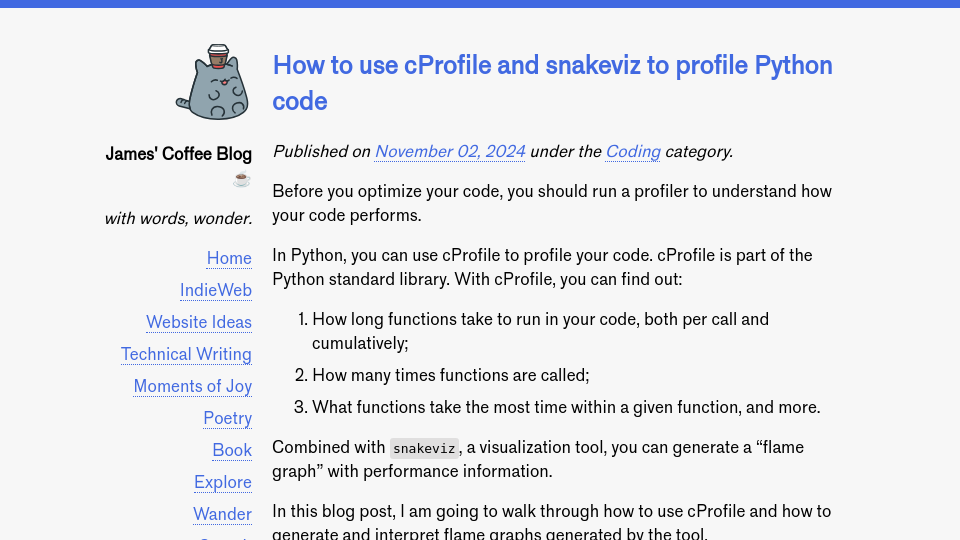

Before you optimize your code, you should run a profiler to understand how your code performs.

In Python, you can use cProfile to profile your code. cProfile is part of the Python standard library. With cProfile, you can find out:

- How long functions take to run in your code, both per call and cumulatively;

- How many times functions are called;

- What functions take the most time within a given function, and more.

Combined with snakeviz, a visualization tool, you can generate a “flame graph” with performance information.

Here is an example of such a flame graph:

In this blog post, I am going to walk through how to use cProfile and how to generate and interpret flame graphs generated by the tool.

How to run cProfile

There are two ways to run cProfile. You can run the tool on:

- Entire programs, or;

- Functions in a program.

To run cProfile on a full program, you can use the following command:

python3 -m cProfile -o output.out program.py

The -o output.out argument says that you want to save the output. This output can then be read by snakeviz to generate a flame graph.

To run cProfile on a single function, wrap the function you want to profile in a cProfile.run() call. Your function should be enclosed in strings:

import cProfile

cProfile.run("your_function(args, ...)", output="output.out")

If you don’t specify an output, cProfile will show profiling information in the console. I find the information hard to parse, and prefer to use snakeviz to visualize the results.

How to visualize profile results

In this tutorial, I am going to be walking through the profile for a short Python search index program. The program lets you find documents that match a search term. The program doesn’t call many functions, which will reduce the complexity of the final profile. We will not be optimizing the program since it is relatively barebones, but it will be useful to illustrate how a flame graph works. I recommend downloading the code and profiling it on your machine so you can explore snakeviz interactively as this guide progresses.

I am going to profile the whole program with the cProfile command from earlier. Then, we can visualize the results with snakeviz.

If you don’t already have snakeviz installed on your system, you can install it with:

pip3 install snakeviz

Then, you can open the profiler results:

snakeviz output.out

A browser tab will open up a flame graph that represents the results from the profiler:

There are two parts to the page:

- The flame graph, and;

- A table with information about function calls.

These two components can interact together. We’ll talk about how in the next section.

Reading and working with a flame graph

The cProfile flame graph should be read from the top down.

Each box label has a few components. Here is an example:

idx.py:19(transform_text) 0.732s

This tells us that the function transform_text is called in the idx.py file on line 19. In total, all invocations of the function on line 19 took 0.732s when the program run.

Every box is a function. If a box is under another box, it means the function the box represents is being called by the box above it. In the example above, idx.py:19(transform_text) is nested below idx.py:22(build_index). This tells us that transform_text is called by build_index.

With this information, we can start to evaluate what parts of the program take the longest to run. The bigger the box, the longer the part takes to run.

Below the flame graph, there is a table.

This table has a few columns:

- ncalls: the number of times a function is called.

- tottime: total time spent running a function, excluding the amount of time taken to run sub-functions.

- percall: the tottime / ncalls.

- cumtime: total time spent running a function and all of its subfunctions.

- percall: the cumtime / ncalls.

- filename:lineno(function): the signature that tells us the file name, line number, and function name associated with an entry in our profiler results.

You can sort the columns by clicking on a column header. This is especially useful for finding out:

- What functions are called the most, and how many times they are called.

- What functions take the most time to run per call.

In the example above, our text transformation function takes a substantial portion of the 1.49s total program execution time. We can investigate the function more by clicking on the box.

If a function label isn’t visible in a box, hover over the box and see the label on the left side.

Clicking a box has a secondary effect: the table below narrows its focus to only the function corresponding with the box you clicked on and all of its sub-functions. This means that you can sort and search the columns to find the functions that take the most time to run or are called the most within a given function.

This is useful for better understanding how different sub-functions contribute to the performance of the parent function.

If you clicked on a box and want to go back to seeing the full chart, click “Reset Root”.

Analyzing a flame graph

A flame graph doesn’t tell you what to fix, but it can give you hints on where to look.

As you get started, I recommend looking for the functions that take the most time. Is a function taking surprisingly long? If so, narrow in to the function to see what’s going on. You may notice an expensive sub-function is called a lot. If the sub-function could be cached, you could add an LRU cache to improve lookup times after initial calculation

If a function is being called unnecessarily (i.e. it’s being called in a condition in an if statement and the body of the if statement, or the function is called before a break statement when it could be called after), you could adjust your logic appropriately.

If you are relying on a dependency that takes longer than you need to meet the requirements of your program, you could consider looking at alternatives that are faster. For example, orjson is faster than the out-of-the-box json library; pyromark is faster at processing markdown than other libraries.

I was recently working on a search engine in Python. I wanted to make it faster. I ran cProfile on my code and quickly noticed some things that stood out. First, I sorted functions by ncalls to see what I was calling the most, and how the time contributed to my program runtime. I found that I was making 16,000,000 calls to the dictionary.get() function.

While dictionary.get() calls are fast, running lots of them will be slow. I just tested running 16,000,000 dictionary.get() calls. It took 0.65s to run. Given I wanted my search engine to return results in < 10ms, this was clearly a problem.

I went back to my code and started to think about ways I could reduce the number of calls. I found things like:

- Using get() calls when I didn’t need to check for presence (i.e. because another previous line of code already indicated presence);

- Using get() calls in an

ifstatement and also the body of the statement, when I could declare a variable that calls the get function once; - Using get() calls in nested loops whose logic could have been simplified.

I had to figure out what changes to make by reading my code and thinking more about the logic I wanted to build, but I couldn’t have done that without the profiling data that helped me figure out what was slow.

Conclusion

cProfile is an essential tool in my toolkit as a Python programmer. With cProfile, I can find:

- What parts of my program take the longest to run;

- What sub-functions contribute to a function taking a long time;

- Functions being called more times than is necessary.

My workflow for writing programs where speed is essential is to write a program, test with cProfile, make changes, test again, and keep going until I reach my goal.

With that said, cProfile doesn’t tell me how to write performant code. Rather, it gives me information that I can use to better evaluate my program. For instance, if something takes way too long even after simplifying the logic, I may need another algorithm. Maybe I need another data structure. Maybe I need to pre-compute information.

Profiling can help me figure out when this is necessary; if, after optimization, a function still doesn’t meet performance requirements, I will start to research different ways to approach a problem.

Addendum: Function names

Some functions in the flame graph will have names of functions in the standard library, like:

:0(method 'lower' of 'str' objects)

This is because cProfile profiles all function calls. In the case above, cProfile is telling us about the invocation of the lower function on a string object. Some functions may also not appear in your code but will appear in the profile, like:

:0(built-in method _sre.ascii_tolower)

These are low-level functions. I haven’t really had a need to touch these in my profiling yet.

In addition, dependencies you call will be in the graph. This is a useful tool! Because dependencies are mentioned, you can see how they contribute to the overall runtime of your program.